Posted May 17th, 2010 by rybolov

This was announced a couple of weeks ago (at least 9000 days ago in Internet time), so now it’s “old news,” but have a look at Metricon 5.0, which will be in DC on the 10th of August.

It’s a small group (attendance is capped at 60), but if you’re managing security in Government, I want to encourage you to do 2 things:

- Submit a paper!

- Attend and learn.

I’ll be there doing a bit of hero-worship of my own with the Security Metrics folks.

Posted in Public Policy, Speaking |  1 Comment »

Tags: government • infosec • infosharing • management • metrics • publicpolicy • security • speaking

Posted May 17th, 2010 by rybolov

Having just finished our mini-semester class on InfoSec and Public Policy, I want to share with my old friend, the Interweblagosphere, a small process/framework for doing some analysis. The end product can be a paper, legislation, or even a basic guideline for developing metrics.

- Problem Space Definition: Give a point of view on a particular subject and explain why it is important. Put more conventionally, what exactly is your thesis statement?

- History of Incidents: prove that the problem is worth the time to solve. Usually this involves identifying a handful of large-scale incidents that can serve as the models for your analysis. Looking at these incidents, what worked and what didn’t? Start to form some opinions. You will revisit these incidents later on as models.

- Regulatory Bodies: beginning of stakeholder definition. Identify the responsible Government or industry-specific organizations and their history of dealing with the problem. What strategic plans and reports already exist that you can use to feed your analysis?

- Private Sector Support: more stakeholders. How much responsibility does private industry have in this issue and what is the impact on them? They can be owners (critical infrastructure), vendors (hardware, software, firmware), maintainers, etc.

- Other Stakeholders: Consider end users, the people who depend on a service that, in turn, depends on the IT and the information therein.

- Trend and Metrics: what do we know about the topic given published metrics or our analysis of themes across our key incidents? If you notice a lack of metrics on the subject, what would be your “wish list,” and what plan do you have for getting these metrics? For information security, this is typically a huge gap: either we’re creating metrics to show where we’ve succeeded at the tactical level, or we’re generating metrics with surveys, which are notoriously flawed.

- Options and Alternatives Analysis: weigh the pros and cons of each option and the evidence that suggests it might succeed. Take your model incidents and run your options through them: would each option help in each scenario? Gather up more incidents and see how the options would affect them. As you run through each option and scenario, consider each of the following:

- Efficacy of the Option: does it actually solve the root cause of the problem?

- Winner Stakeholders

- Loser Stakeholders

- Audit Burden

- Direct Costs

- Indirect Costs

- Build Strategic Plan and Recommendations: Based on your analysis of the situation (model incidents, metrics, and power dynamics), build recommendations from the high-performing options and form them into a strategic plan. The more specific you can get, the better.
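One way to make the options-and-alternatives step concrete is a simple decision matrix. The Python below is a minimal sketch, not part of the original framework: it assumes you score each option against each model incident on the criteria listed above, using a hypothetical 1 to 5 scale where higher is always better (so 5 means low audit burden or low cost). The weights, option names, and scores are illustrative placeholders, not results from any real analysis.

```python
from dataclasses import dataclass

# Criteria from the options-analysis step. Scores use a hypothetical
# 1-5 scale where higher is always better (e.g. 5 = low audit burden,
# 5 = few or lightly affected "loser" stakeholders).
CRITERIA = ("efficacy", "winners", "losers",
            "audit_burden", "direct_costs", "indirect_costs")

# Hypothetical weights: efficacy against the root cause counts most.
WEIGHTS = {"efficacy": 3.0, "winners": 1.0, "losers": 1.0,
           "audit_burden": 1.0, "direct_costs": 1.5, "indirect_costs": 1.0}


def uniform(value: int) -> dict[str, int]:
    """Give every criterion the same score (handy for placeholder data)."""
    return {c: value for c in CRITERIA}


@dataclass
class Option:
    name: str
    scores: dict[str, dict[str, int]]  # model incident -> criterion -> score

    def incident_score(self, incident: str) -> float:
        """Weighted score of this option against one model incident."""
        s = self.scores[incident]
        return sum(WEIGHTS[c] * s[c] for c in CRITERIA)

    def total(self) -> float:
        """Average weighted score across all model incidents."""
        return sum(self.incident_score(i) for i in self.scores) / len(self.scores)


def rank(options: list[Option]) -> list[tuple[str, float]]:
    """Order options from best to worst average weighted score."""
    return sorted(((o.name, o.total()) for o in options),
                  key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder incidents and options, not real data.
    middling = Option("Option A", {"Incident 1": uniform(3), "Incident 2": uniform(3)})
    effective = {**uniform(2), "efficacy": 5}
    targeted = Option("Option B", {"Incident 1": effective, "Incident 2": effective})
    for name, score in rank([middling, targeted]):
        print(f"{name}: {score:.2f}")
```

With these made-up numbers, Option B (26.00) edges out Option A (25.50) despite weaker scores on costs and stakeholders, purely because the assumed weighting favors efficacy. Drop the efficacy weight to 1.0 and the ranking flips, which is exactly the kind of sensitivity worth discussing before turning the high performers into a strategic plan.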

Note that, for the most part, these steps are not exclusive to information security; they apply to public policy analysis in general. A couple of parts need extra emphasis because infosec is still an immature field.

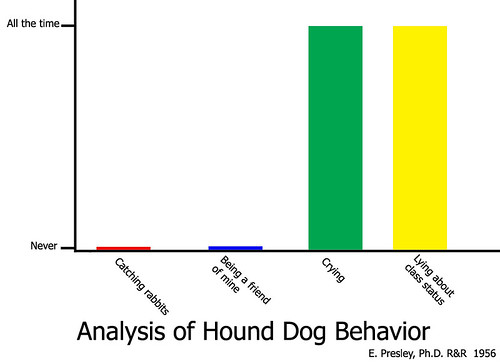

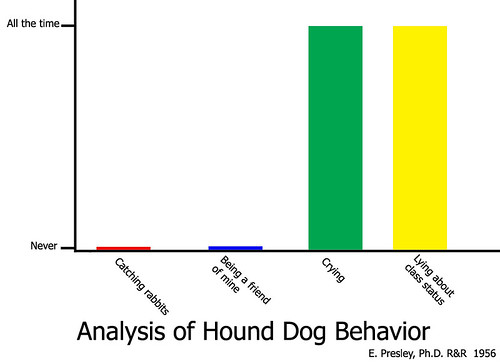

Analysis of Hound Dog Behavior graph by MShades. Our analysis is a little bit more in-depth. =)

Then there are the criteria for evaluating the strategic plan and the analysis leading up to it:

- Has an opinion

- Backs up the opinion by using facts

- Has models that are used to test the options

- Lays out a well-defined plan

As usual, I stand on the shoulders of giants; in this case, my Favorite Govie provided quite a bit of input in the form of our joint syllabus.

Posted in Public Policy, What Works |  2 Comments »

Tags: infosharing • legislation • publicpolicy • security

Posted April 7th, 2010 by rybolov

Just a quick post to shill for Privacy Camp DC 2010, which will be taking place on the 17th of April in downtown DC. I went last year and it was a lot of fun. The conversation ranged from recommendations for a rewrite of

The basic rundown of Privacy Camp is that it’s run like a Barcamp, where the attendees are also the organizers and presenters. If you’re tired of going to death-by-PowerPoint, this is the place for you. And it’s not just for government types; there is wide representation from non-profits and regular old commercial companies.

Anyway, what are you waiting for? Go sign up now.

Posted in Odds-n-Sods, Public Policy, The Guerilla CISO |  1 Comment »

Tags: government • infosec • infosharing • law • legislation • management • privacy • publicpolicy • security

Posted April 1st, 2010 by rybolov

Well, several funny things happened; they happen every week. But specifically I’m talking about the hearing in the House Committee on Homeland Security on FISMA reform, titled “Federal Information Security: Current Challenges and Future Policy Considerations.” If you’re in information security and Government, you need to go read through the prepared statements and even watch the hearing.

Also referenced is H.R. 4900, which was introduced by Representative Watson as a modification to FISMA. I also recommend that you have a look at it.

Now for my comments and rebuttals to the testimony:

- On the cost per sheet of FISMA compliance paper: If you take the State Department’s figure of $1,700 per sheet at face value, you’re absolutely daft. The arithmetic is probably right: it is simply the cost of the security program divided by the total number of sheets of paper. In fact, if you do the security bits right, your cost per sheet will go up considerably, because you’re doing much more security work while producing less paperwork.

- Allocating budget for red teams: Do we really need penetration testing to prove that we have problems? In Mike Smith’s world, we’re just not there yet, and proving that we’re not there is just an excuse to throw the InfoSec practitioners under the bus when they’re not the people who created the situation in the first place.

- Gus Guissanie: This guy is awesome and knows his stuff. No, really, the guy is sharp.

- State Department Scanning: Hey, it almost seems like NIST has this in 800-53. Oh wait, they do, only it’s given the same precedence as everything else. More on this later.

- Technical Continuous Monitoring Tools: Does anybody else think that using products of FISMA (SCAP, CVE, CVSS) as evidence that FISMA is failing is a bit like dividing by zero? We really have to be careful of this or we’ll destroy the universe.

- Number of Detected Attacks and Incidents as a Metric: Um, this always gets a “WTF?” from me. Is the number increasing because we’re monitoring better, because we’re counting a whole bunch of small events as attacks (i.e., the IDS flagged something), or because the number of attacks is really increasing? I asked this almost 2 years ago and nobody has answered it yet.

- The Limitations of GAO: GAO are just auditors. Really, they depend on the agencies not to misrepresent facts and to give them an understanding of how their environment works. Auditing and independent assessment are not the answer here because this is not a fraud problem; it’s a resources and workforce development problem.

- OMB Metrics: I hardly ever talk bad about OMB, but their metrics suck. Can you guys give me a call and I’ll give you some pointers? Or rather, check out what I’ve already said about federated patch and vulnerability management then give me a call.
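The cost-per-sheet point is easiest to see with a worked ratio. The sketch below uses a hypothetical `cost_per_sheet` helper and made-up budgets and page counts (these are not the State Department’s numbers): shifting effort from paperwork into actual security work makes the per-sheet figure go up, even though, by the argument above, that is the better program.

```python
def cost_per_sheet(program_cost: float, sheets: int) -> float:
    """Security program cost divided by sheets of compliance paperwork."""
    if sheets <= 0:
        raise ValueError("sheets must be positive")
    return program_cost / sheets


# Hypothetical paper-heavy program: most effort goes into documents.
paper_heavy = cost_per_sheet(program_cost=1_000_000, sheets=2_000)

# Hypothetical security-heavy program: a bigger budget spent on actual
# security work, with far fewer pages produced.
security_heavy = cost_per_sheet(program_cost=1_200_000, sheets=500)

print(f"paper-heavy:    ${paper_heavy:,.0f} per sheet")     # $500 per sheet
print(f"security-heavy: ${security_heavy:,.0f} per sheet")  # $2,400 per sheet
```

The per-sheet figure rises 4.8-fold while the paperwork drops by three quarters, so the ratio mostly tells you how little paper a program produces, not how much security it buys.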

So now for Rybolov’s plan to fix FISMA:

- You have to start with workforce management. This has been addressed numerous times and has a couple of different manifestations: DoD 8570.01-M, contract clauses for levels of experience, role-based training, etc. Until you have an adequate supply of clueful people to match the demand, you will continue to get subpar performance.

- More testing will not help; it’s about execution. In the current culture, we believe that the more testing we do, the more likely the people being tested will be able to execute. This is flat-out wrong, and I’ve commented on it before. I think that if the problem really were people being lazy or fraudulent, we would have fixed it by now. My theory is that we have too many wonks who know the law but not the tech, and not enough techs who know the law. To do the job, you need both. This is also where I deviate from the SANS 20 Critical Security Controls approach and the IGs that love it.

- Fix Plans of Action and Milestones. These are supposed to track long-term/strategic problems, not the short-term/tactical application of patches; the tactical stuff should be automated. The reasoning is that you use these plans for budget requests in the following years.

- Fix the budget train. Right now the people with the budget (programs) are not the people running the IT and the security of it (CIO/CISO). I don’t know if the answer here is a larger dedicated budget for the CISO’s staff or a larger “CISO Tax” on all program budgets. I could really policy-geek out on you here; just take my word for it that the people with the money are not the people protecting the information, and until you account for that, you will always have a problem.

Sights Around Capitol Hill: Twice Sold Tales photo by brewbooks. Somehow it seems fitting; I’ll let you figure out if there’s a connection. =)

Posted in FISMA, Public Policy, Rants, Risk Management |  7 Comments »

Tags: accreditation • auditor • C&A • catalogofcontrols • certification • comments • compliance • fisma • gao • government • infosec • law • legislation • management • metrics • NIST • omb • publicpolicy • scalability • scap • security

Posted February 26th, 2010 by rybolov

OK, so I lied unintentionally all those months ago when I said I wouldn’t write any more PCI-DSS posts.

My impetus for this blog post is a PCI-DSS panel at ShmooCon that several of my friends (Jack Daniel, Anton Chuvakin, Mike Dahn, and Josh Corman, in no particular order) were on. I know I’m probably the pot calling the kettle black, but the panel (as you would expect for any PCI-DSS discussion in the near future) rapidly dissolved into chaos. So as I sat in the audience watching @Myrcurial’s head pop off, I came to the realization that this is really 4 different conversations disguised as one topic:

- The Cost-Benefit Assessment (CBA) of replacing the credit card number and CVV2 with something else (maybe chip and PIN, maybe something entirely different), and what responsibility Visa and MasterCard have towards protecting their business. This calls for something more like an ROI approach because these are infrastructure projects. Maybe this CBA has already been done, but guess what: nobody has said anything about the result of that analysis.

- Merchants’ responsibility to protect their customers, their business, and each other. This is the usual PCI-DSS spiel. The public policy equivalent here is overfishing: everybody knows that if they come back with full nets and by-catch, they’re going to ruin the fishery long-term for themselves and their peers, but they can’t stop the destruction of the fishery by themselves; they need everybody in the community to do their part. In the same way, merchants who don’t protect card data mess over each other in this weird shared risk pool.

- Processor and bank responsibility. Typically this means the Tier-1 and Tier-2 guys. The issue here is that these guys are most of the processing infrastructure. What works in PCI-DSS for small merchants doesn’t scale up to match these guys, and that’s the story here: how do you make a framework that scales? I think it’s there (i.e., the tiers and assurance levels in PCI-DSS), but it’s not communicated effectively.

- Since this is all a shared risk pool, where does it make sense to address particular risks? That is, what is the division of roles and responsibilities inside the “community”? Then how do you make a community standard that is at least reasonably fair to all the parties on this spectrum, Visa and MasterCard included?

PCI-DSS Tag Cloud photo by purpleslog.

There are a bunch of tangential questions, but they almost always circle back to the 4 that I’ve mentioned above:

- Regulatory capture and service providers

- The pitfalls of designing a framework by committee

- Self-regulation v/s legislation and Government oversight

- Levels of hypocrisy in managing the “community”

- Effectiveness of specific controls

Now the problem as I see it is that each of these conversations points to a different conversation as the solution, and in doing so, they become thought-terminating clichés. What this means is that when you do a panel, you’re bound to bounce between these 4 different themes without coming to any real resolution. Add to this a completely irrational audience in which each person understands only their own piece of the topic, and you have complete chaos in any panel or debate.

Folks, I know this is hard to hear, but as an industry, we need to get over being crybabies and pointing fingers when it comes to PCI-DSS. The standard (or a future version of it, anyway) and self-regulation are here to stay, because even if we fix the core problems of payment, we’ll still have security problems: payment schemes are where the money is. The way I see it, the standards process needs to be more transparent, and the people governed by the standard need a seat at the table, bringing rational, adult, and constructive arguments about what works and what doesn’t so that they can do their jobs and help themselves.

Posted in Public Policy, Rants |  1 Comment »

Tags: pci-dss • publicpolicy • risk

Posted December 1st, 2009 by rybolov

OK, so it’s been a couple of months of thinking about this thing. I threw together a rainbow-looking beast that now occupies my spare brain cycles.

Rybolov’s Information Security Management Model

And some peculiarities of the model that I’ve noticed:

Regulation, compliance, and governance flow from the top to the bottom. Technical solutions flow from the bottom to the top.

The Enterprise (Layer 4) gets the squeeze. But you CISOs out there knew that already, right? In the typical information security world, it makes a lot of sense to focus on layers 3, 4, and 5 because you don’t usually own the top and the bottom of the management stack.

The security game is changing because of legislation at layers 5 and 6. Think national data breach law. It seems like the trend lately is to throw legislation at information security problems. The scary part to me is that they’re trying to take concepts that work at layers 3 and 4 and scale them up the model, with very mixed results, because nobody is studying what happens above the Enterprise. Seriously, we’re making legislation based on analogies.

Typically each layer only knows about the layer above and below it. This is a serious problem when you have people at layers 5 and 6 trying to create solutions that carry down to layers 1 through 4.

At layers 1 and 2, you have the greatest chance to solve the root causes of security problems. The big question here is “How do we get the people working at these layers the support that they need?”

Posted in Public Policy |  7 Comments »

Tags: government • infosec • legislation • management • publicpolicy • scalability • security
