Some Thoughts on POA&M Abuse

Posted June 8th, 2009 by rybolov

Ack, Plans of Action and Milestones. I love them and I hate them.

For those of you who “don’t habla Federali”, a POA&M is basically an IOU from the system owner to the accreditor that yes, we will fix something but for some reason we can’t do it right now. Usually these are findings from Security Test and Evaluation (ST&E) or Certification and Accreditation (C&A). In fact, some places I’ve worked, they won’t make new POA&Ms unless they’re traceable back to ST&E results.

Functions that a POA&M fulfills:

- Issue tracking to resolution

- Serves as a “risk register”

- Used as the justification for budget

- Generate mitigation metrics

- Can be used for data-mining to find common vulnerabilities across systems
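To make the metrics and data-mining functions concrete, here is a minimal sketch. The record layout (`system`, `weakness`, `status`) and the sample data are assumptions made up for illustration, not the format of any real POA&M tool.

```python
from collections import Counter

# Hypothetical POA&M records; field names and values are illustrative only.
poams = [
    {"system": "payroll", "weakness": "missing patches", "status": "open"},
    {"system": "payroll", "weakness": "weak passwords", "status": "closed"},
    {"system": "hr-portal", "weakness": "missing patches", "status": "open"},
    {"system": "web-app", "weakness": "missing patches", "status": "open"},
    {"system": "web-app", "weakness": "no audit logging", "status": "open"},
]


def common_weaknesses(records, min_systems=2):
    """Return weaknesses that show up on at least min_systems distinct systems."""
    systems_by_weakness = {}
    for record in records:
        systems_by_weakness.setdefault(record["weakness"], set()).add(record["system"])
    return {
        weakness: sorted(systems)
        for weakness, systems in systems_by_weakness.items()
        if len(systems) >= min_systems
    }


def open_counts(records):
    """Count open POA&Ms per system (the 'mitigation metric' view)."""
    return Counter(r["system"] for r in records if r["status"] == "open")


print(common_weaknesses(poams))
print(open_counts(poams))
```

A weakness that appears on several systems is a hint that it might belong at the enterprise level rather than on each system's list.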

But today, we’re going to talk about POA&M abuse. I’ve seen my fair share of this.

Conflicting Goals: The basic problem is that we want POA&Ms to satisfy too many conflicting functions. For example, if we use the number of open POA&Ms as a metric for whether our system owners are doing their job and closing out issues, and we also turn around and report those numbers at the department level or up to OMB, then everybody has an incentive to get POA&Ms closed as fast as possible, even if it means losing the ability to track things at the system level or to spend time on things that solve long-term security problems. Our vulnerability/weakness/risk management process forces us into creating small, easy-to-satisfy POA&Ms instead of long-term projects.

Near-Term vs. Long-Term: If we set up POA&Ms with due dates of 30, 60, and 90 days (for high, moderate, and low risks), we don’t really have time to turn these POA&Ms into budget support. If we manage the budget up to 3 years in advance and even the slowest finding is due in 90 days, then we’ll have exactly 0 input into the budget from any POA&M unless we can delay the bugger for 2 years or so, which is much too long for anything that’s actually fixable.
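The arithmetic is easy to sketch. The numbers below are the ones from the paragraph above (30/60/90-day due dates, a budget planned up to 3 years out); treating a year as 365 days is a simplifying assumption.

```python
# Due dates by risk level, in days, as described in the text.
DUE_DAYS = {"high": 30, "moderate": 60, "low": 90}

# Assumption: the budget is locked in up to 3 years ahead, at 365 days per year.
BUDGET_LEAD_DAYS = 3 * 365


def budget_gap_days(risk):
    """Days between a POA&M's due date and the earliest budget it could influence."""
    return BUDGET_LEAD_DAYS - DUE_DAYS[risk]


for risk in DUE_DAYS:
    print(f"{risk}: due in {DUE_DAYS[risk]} days, budget gap {budget_gap_days(risk)} days")
```

Under these assumptions every POA&M is due years before the money it might justify could show up.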

Bad POA&Ms: Let’s face it, sometimes the one-for-one mapping of ST&E, C&A, and risk assessment findings to POA&Ms means that you get POA&Ms that are “bad”, and by that I mean that they can’t be satisfied or they’re not really something that you need to fix.

Some of the bad POA&Ms I’ve seen (paraphrased from the originals):

- The solution uses {Microsoft|Sun|Oracle} products, which have a history of vulnerabilities

- The project team needs to tell the vendor to put IPv6 into their product roadmap

- The project team needs to implement X which is a common control provided at the enterprise level

- The System Owner and DAA have accepted this risk but we’re still turning it into a POA&M

- This is a common control that we really should handle at the enterprise level but we’re putting it on your POA&M list for a simple web application

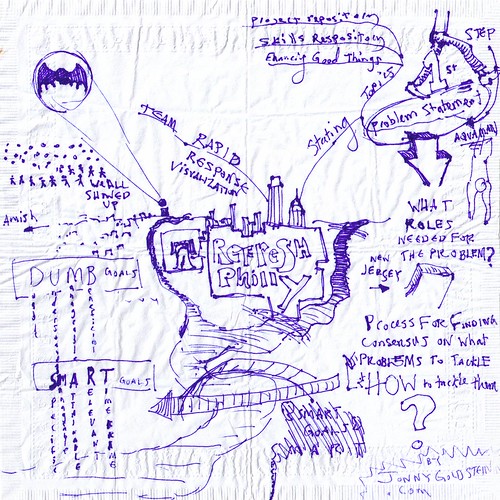

Plan of Action for Refresh Philly photo by jonny goldstein.

Keys to POA&M Nirvana: So over the years, I’ve observed some techniques for success in working with POA&Ms:

- Agree on the evidence/proof of POA&M closure when the POA&M is created

- Fix it before it becomes a POA&M

- Have a waiver or exception process that requires a cost-benefit-risk analysis

- Start with “high-level” POA&Ms and work down to more detailed POA&Ms as your security program matures

- POA&Ms are between the System Owner and the DAA, but the System Owner can turn around and negotiate a POA&M as a cedural with an outsourced IT provider

And then the keys to Building Good POA&Ms:

- Actionable–i.e., they have something that you need to do

- Achievable–they can be accomplished

- Demonstrable–you can demonstrate that the POA&M has been satisfied

- Properly-Scoped–absorbed at the agency level, the common control level, or the system level

- They are SMART: Specific, Manageable, Attainable, Relevant, and within a specified Timeframe

- They are DUMB: Doable, Understandable, Manageable, and Beneficial

Yes, I stole the last 2 bullets from the picture above, but they make really good sense in the same way that “know thyself” is awesome advice from the Oracle at Delphi.
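Some of the checks from the lists above can be automated as a sanity check when a POA&M is created. This is only a sketch: the `Poam` fields and the `problems` helper are hypothetical names invented here, and "achievable" still needs a human to judge.

```python
from dataclasses import dataclass

# The three levels a POA&M can be absorbed at, per the "Properly-Scoped" bullet.
VALID_SCOPES = {"agency", "common control", "system"}


@dataclass
class Poam:
    """Hypothetical POA&M record; field names are assumptions for illustration."""
    weakness: str
    action: str = ""             # what somebody actually has to do
    closure_evidence: str = ""   # proof of closure agreed on at creation time
    scope: str = ""              # agency, common control, or system
    risk_accepted: bool = False  # System Owner and DAA already accepted the risk


def problems(poam):
    """Return a list of reasons this POA&M looks like a 'bad' one."""
    issues = []
    if not poam.action.strip():
        issues.append("not actionable: no action defined")
    if not poam.closure_evidence.strip():
        issues.append("not demonstrable: no closure evidence agreed")
    if poam.scope not in VALID_SCOPES:
        issues.append("not properly scoped")
    if poam.risk_accepted:
        issues.append("risk already accepted: should not be a POA&M")
    return issues


bad = Poam(weakness="Vendor products have a history of vulnerabilities")
good = Poam(
    weakness="Missing patches on web server",
    action="Apply vendor patches",
    closure_evidence="Clean rescan report",
    scope="system",
)
print(problems(bad))
print(problems(good))
```

The point of a check like this is to catch the unsatisfiable POA&Ms before they land on somebody's list, not to replace the conversation between the System Owner and the DAA.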

Posted in BSOFH, FISMA

Tags: accreditation • C&A • certification • compliance • fisma • government • infosec • management • metrics • risk • scalability • security